2029: the AGI Election?

Even on the most bearish estimates of AI's effect on the economy, politics is going to be transformed.

Mark Zuckerberg said recently that ‘…probably in 2025, we at Meta, as well as the other companies that are …working on this, are going to have an AI that can effectively be a sort of mid-level engineer that you have at your company, that can write code. … a lot of the code in our apps…including the AI that we generate, is actually going to be built by AI engineers instead of people engineers’.

At Davos, OpenAI’s Chief Product Officer, Kevin Weil, speaking to the Wall Street Journal, is asked to comment on Anthropic CEO Dario Amodei’s claim, made 24 hours earlier, that by 2027 AI will be better than humans at most, or even all, things. Responding, he tells us that AI went from ‘…the millionth best coder to the thousandth best coder to the 175th best coder in 3 to 4 months… we’re on a very steep trajectory here… I don’t even know if it’ll be 2027’.

A week earlier, Bloomberg reported that ‘Global banks will cut as many as 200,000 jobs in the next three to five years as artificial intelligence encroaches on tasks currently carried out by human workers.’

This week (19 January), Paul Schrader, writer of Taxi Driver, asked ChatGPT for ‘Paul Schrader script ideas’ and noted that it had better ideas than he did. He sent it a script he had written years ago, asked for improvements and, he tells us, ‘In five seconds it responded with notes as good or better than I’ve ever received from a film executive’. Reflecting on this, he mused: ‘I’ve just come to realize AI is smarter than I am. Has better ideas, has more efficient ways to execute them. This is an existential moment, akin to what Kasparov felt in 1997 when he realized Deep Blue was going to beat him at chess.’

His words echo those of Lee Sedol, a multiple world champion in the strategy game Go, who retired in 2019, saying ‘With the debut of AI in Go games, I've realised that I'm not at the top even if I become the number one through frantic efforts…’

I’ve long suspected that many journalists have realised this too, and that it is one of the reasons reporting in The Times, the FT and elsewhere is so consistently focused on AI’s errors and failings rather than on the breathtaking progress in the field. Contrast the economist Tyler Cowen and the pseudonymous AI expert ‘Gwern’. Both explicitly acknowledge that they are writing not so much for their human readers as for the AIs. As Cowen puts it, ‘If you wish to achieve some kind of intellectual immortality, writing for the AIs is probably your best chance.’ Making your mark before being eclipsed.

But regardless, by 2029, a great many people are going to feel like Kasparov in 1997, Lee Sedol in 2019, and Paul Schrader in 2025.

Research published in the Harvard Business Review in November suggests something I have long suspected: AI is likely to take more jobs than it creates. ChatGPT has reduced the number of jobs posted for online gig workers: ‘…Writing jobs were affected the most (30.37% decrease), followed by software, app, and web development (20.62%) and engineering (10.42%).’ Similarly, in recent months Klarna, the Swedish fintech, stopped hiring and started shrinking its workforce as AI increased the company’s productivity. Salesforce stopped hiring software engineers on the basis that it had achieved a 30% productivity increase driven by its AI tools.

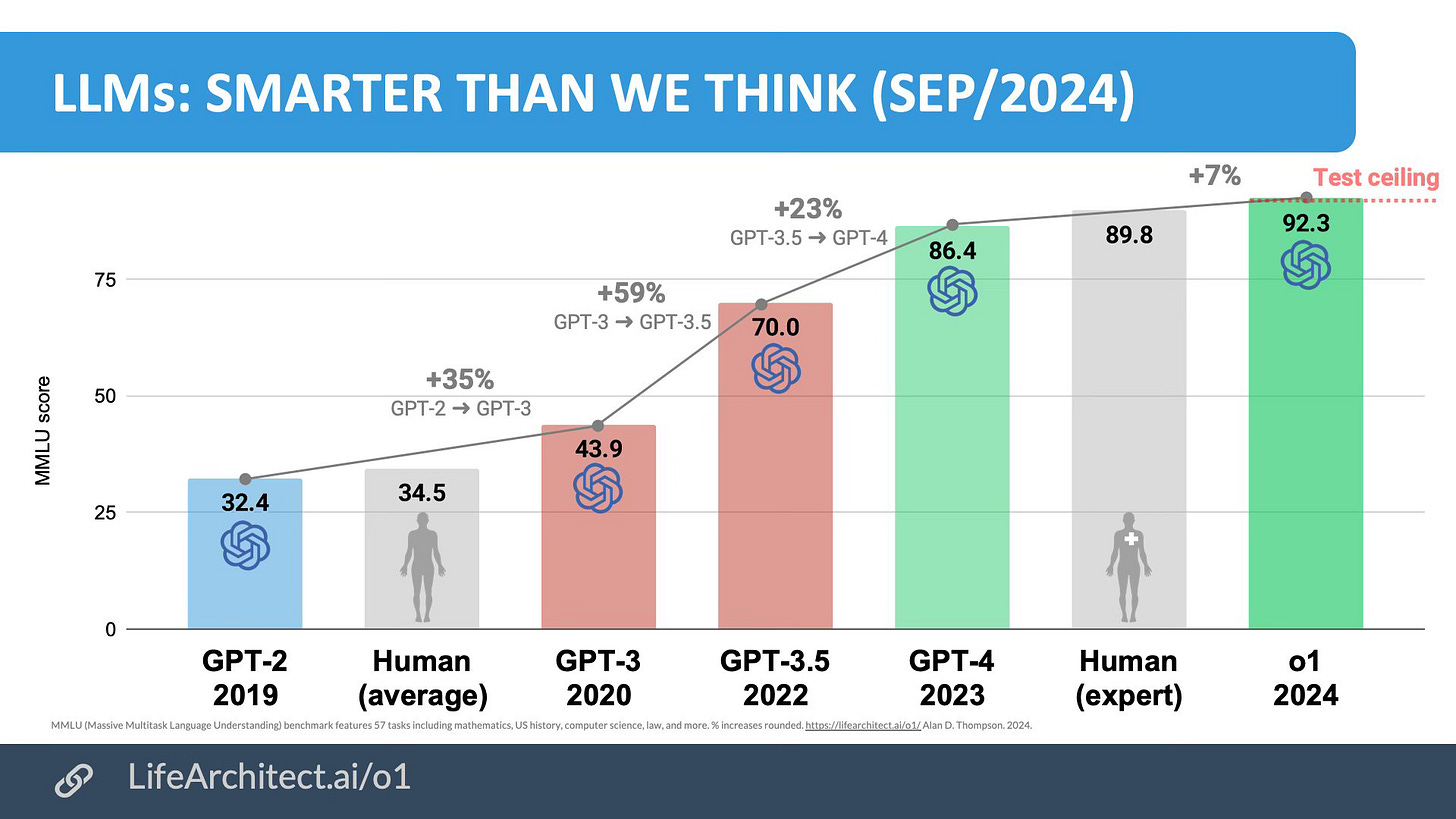

It’s not hard to see why this is. Take a look at this illustration from @daveshapi on X:

And remember, as I noted in my last post, that OpenAI has now unveiled its o3 model, which is better again, by orders of magnitude.

I suspect that if you don’t subscribe to, and routinely use, Claude, ChatGPT or Google’s Gemini, you probably don’t realise how good AI has got. This week it has helped with my financial planning, letting me ask questions about my bank statements and build projections. It has helped in the gym, ranking my performance and advising on specific exercises to help with injuries or for faster gains. It produced rapid briefings on people I was due to meet. From just a handful of PDFs on a company’s products and services that I found online, it knocked up a podcast about that company which I could listen to en route to meeting them. It has helped reduce food waste: a photo of the fridge and store cupboard, along with a quick request for recipe ideas, yielded several (delicious!) suggestions. It has checked the financial forecasts for my new business, refined the words for a talk, helped me find sources, assisted me in understanding research papers, and let me ask quick questions on concepts I am shaky on while completing an online learning course. In business planning, I think I can scale without hiring, using AI agents to do many tasks, including some of the most creative and interesting ones. It regularly refines the text I write and publish. It suggests ideas. …I like to think I have some smart friends (obviously, I never tell them this), but I don’t have any who could provide advice across such a wide range of topics, so quickly.

Consequently, I think we will see jobs lost to AI throughout the remainder of the current Government’s term, predominantly middle-class, knowledge-worker jobs. The forecasts on AGI I made in ‘In Athena’s Arms’ look increasingly likely to have underestimated the speed of AI progress, given widespread reports that a number of the leading labs are very confident they have achieved, or will shortly achieve, AGI. Earlier this month (6 January), Sam Altman wrote:

> We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. We continue to believe that iteratively putting great tools in the hands of people leads to great, broadly-distributed outcomes.
>
> We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else. Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.

Then he said the hype around AGI was overblown. And then, alongside Oracle’s Larry Ellison and SoftBank’s Masayoshi Son, he announced the $500bn Project Stargate, probably named after the 1994 film in which the Stargate is a portal that lets advanced alien intelligence into our world. This is the Manhattan Project for AI and, like the Manhattan Project, it is being undertaken because those behind it believe the investment will give the US a disproportionate advantage in global power.

A massive increase in US global power is not some abstraction that won’t affect UK elections. It is likely to mean the US dominating the world economy to a far greater degree, beginning to colonise the solar system and beyond, and bringing back resources on a scale that makes the wealth of terrestrial empires look trifling. This is a world in which the breakthroughs in science and technology, and the new products and capabilities they give birth to, are concentrated in a single country. It is a world in which we in the UK are relatively poorer, and perhaps absolutely poorer as our existing industries are decimated. Maybe it is a world in which our best option is to join the list to become the 51st State.

This week also saw the release of the Chinese-developed DeepSeek model, which seems to perform close to the leading existing models but is open source. It reportedly cost far less to train: $5.6M, compared with an estimated $78M for OpenAI’s GPT-4. You can access it for free, or download the model under an MIT licence, and its smaller distilled versions will run on a laptop. You can modify it and do what you want with it. If this model keeps pace with the US models, proliferates and outcompetes the big US AI houses, it would allow the UK to harness the AI revolution without fearing that the US might lock us out. But then we would have to worry that China consistently had the better models, and that the model might have security vulnerabilities built into it. Even without these concerns, the continued development of open source AI at the current rate of progress would still see AI models become a substitute for cognitive labour: knowledge workers would not merely be displaced, taking on new roles with new skills, but replaced, with no other job available in which they might viably work, since all cognitive tasks would be done better by machines.

Consider another, (kinda) more optimistic, take from LinkedIn founder Reid Hoffman, who sees AI ushering in a world where:

• Everything is cheap, reducing the need to work.

• AI makes services, e.g. legal and medical, dirt cheap.

• Focus shifts to ideas, not hard work.

• Anyone can start a business with AI tools.

In this imagining, it seems likely that the returns to human intelligence would increase significantly, with those able to frame problems and come up with new ideas becoming radically wealthier, while those less able become unemployable, replaced by armies of AI agents working for the brightest in society. Wealth inequality, social cohesion, even individual agency for many people, would pose more severe challenges than any we have known before.

Many, myself among them, think that runaway economic growth is likely to follow the advent of AGI. Goldman Sachs forecasts growth from generative AI at 15%; for AGI we would have to assume greater economic effects still. I think diffusion will be held back by resistance within the large, generally sclerotic bureaucracies of big companies and modern nation states. It is likely that the company you work for, and the country you live in, are underestimating what is coming.

But growth will still come, driven by start-ups and scale-ups, by a few large early adopters in business, and by countries whose governments take a longer, more strategic view. As a result, I think growth after AGI is likely to be greater than during the Industrial Revolution (between 1870 and 1900, UK GDP per capita rose by 50 per cent), and much faster in our globalised, interconnected world. There will be many more losers than winners in this revolution: a rising tide that submerges many as it lifts just a few boats.
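
To put the Industrial Revolution benchmark on an annual footing, here is a minimal Python sketch that converts the cumulative 50 per cent rise in UK GDP per capita between 1870 and 1900 into the constant yearly rate that would produce it. It assumes smooth compounding over 30 years, which real growth never is, so treat the result as a rough yardstick rather than a historical estimate; the function name `annualised_rate` is introduced here purely for illustration.

```python
def annualised_rate(total_growth: float, years: int) -> float:
    """Constant yearly rate that compounds to `total_growth` over `years`.

    Assumes smooth annual compounding, a simplification for illustration.
    """
    return (1 + total_growth) ** (1 / years) - 1


# UK GDP per capita rose by about 50 per cent between 1870 and 1900.
rate = annualised_rate(total_growth=0.50, years=1900 - 1870)
print(f"Implied annual growth in GDP per capita: {rate:.2%}")
# prints: Implied annual growth in GDP per capita: 1.36%
```

On this reading, the late-Victorian benchmark works out at roughly one and a third per cent a year, a figure any post-AGI growth rate can be compared against directly.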

We could instead take a bearish view of what AGI might mean for the economy, preferring Tyler Cowen’s claim that AI ‘…will boost the rate of economic growth by something like half a percentage point a year.’

Cowen notes that ‘Over 30-40 years that’s an enormous difference. It will transform the entire world.’ That is true, but I don’t think we’ll need to wait that long to see politics transformed by AI, even on this modest estimate of its impact. If we miss out on, say, an additional 2% of economic growth over the period 2025-2029, against our anaemic forecast growth of 0.9%, the UK election in 2029 will be the AGI election. Unemployed, replaced workers will be voters in a weaker and poorer Britain. They will ask: why didn’t you see this coming? I’m not sure how the UK’s political parties will respond. I am sure they will be doing their election planning, policy planning and manifesto writing, and executing their election campaigns, with a heavy reliance on, perhaps following the instructions of, AI. If they aren’t, they’ll lose to those who are. Just like the rest of us.
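
The compounding behind these claims can be checked with a few lines of Python. This is a sketch under stated assumptions, not a forecast: it reads ‘an additional 2%’ as two extra percentage points of growth in each of the five years 2025 to 2029, on top of the 0.9% forecast, and, purely for illustration, reuses that same 0.9% as the baseline to which Cowen’s half a percentage point is added. The helper `level_gap` is a hypothetical name introduced here; it returns how much larger the economy ends up on the faster path than on the baseline path.

```python
def level_gap(baseline: float, uplift: float, years: int) -> float:
    """Fractional difference in output level after `years` of compounding
    at `baseline + uplift` instead of `baseline` (both as annual rates)."""
    return ((1 + baseline + uplift) / (1 + baseline)) ** years - 1


# Assumption: "+2%" means two extra percentage points every year,
# on top of the 0.9% forecast, across the five years 2025-2029.
uk_gap = level_gap(baseline=0.009, uplift=0.02, years=5)

# Cowen's half a percentage point a year, over 30 and 40 years.
# Assumption: the 0.9% baseline is reused here purely for illustration.
cowen_30 = level_gap(baseline=0.009, uplift=0.005, years=30)
cowen_40 = level_gap(baseline=0.009, uplift=0.005, years=40)

print(f"UK economy in 2029: {uk_gap:.1%} larger on the faster path")
print(f"Cowen's +0.5pp: {cowen_30:.0%} more output after 30 years, "
      f"{cowen_40:.0%} after 40 years")
```

Under these assumptions, the faster UK path leaves the economy roughly a tenth larger by 2029, while Cowen’s half point compounds to around 16% more output after 30 years and over a fifth more after 40.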