In Athena’s Arms: War, Wisdom & AGI in the U.K. Defence Review

What might taking Artificial General Intelligence (AGI) seriously in the UK Defence Review mean in practice?

How Far Away is AGI?

Sam Altman tells us AGI might be with us in “a few thousand days”. Dario Amodei (CEO of Anthropic) says he thinks “…it could come as early as 2026…”.

For me, these suggestions ring true. The accelerating rate and direction of progress suggest AGI will be with us far earlier than many think. Something would have to change in either rate or direction for it to take longer than the 26 years between now and 2050 – the planning horizon for the UK’s Defence Review. We would have to hit a hard limit – and so far every ‘AI will never…’ bet has been proven wrong. So much so we are having to invent new benchmarks all the time. There’s an effort right now to devise ‘Humanity’s Last Exam’, a kind of Rubicon test for AI, cross it, and we know Rome will fall. There’s no going back.

Since I co-wrote Don’t Blink with Rob, “AI”, or those behind it, has won two Nobel prizes: DeepMind’s Demis Hassabis for chemistry, and computer scientist (or, as the BBC offers, ‘Godfather of AI’) Geoff Hinton for physics. Can you imagine the derision if you had suggested this was likely to happen even as late as 2020? There was a long period when DeepMind was being criticised for not having produced anything useful – just cracking games.

In a press conference after receiving news of the award, Hinton said ‘…most of the top researchers I know believe that AI will become more intelligent than people. They vary on the timescales. A lot of them believe that will happen sometime in the next 20 years… AI will become more intelligent than us. And we need to think hard about what happens then.’

Given my intelligence training and background, my instinct is to rely on Metaculus’ median forecasts as best practice. But if I am more honest with myself and you, the truth is I think these are probably bearish. I have laid out these crowd-sourced median Metaculus forecasts many times: AGI by 2027, Oracle AGI by 2029, ASI by 2032.[1]

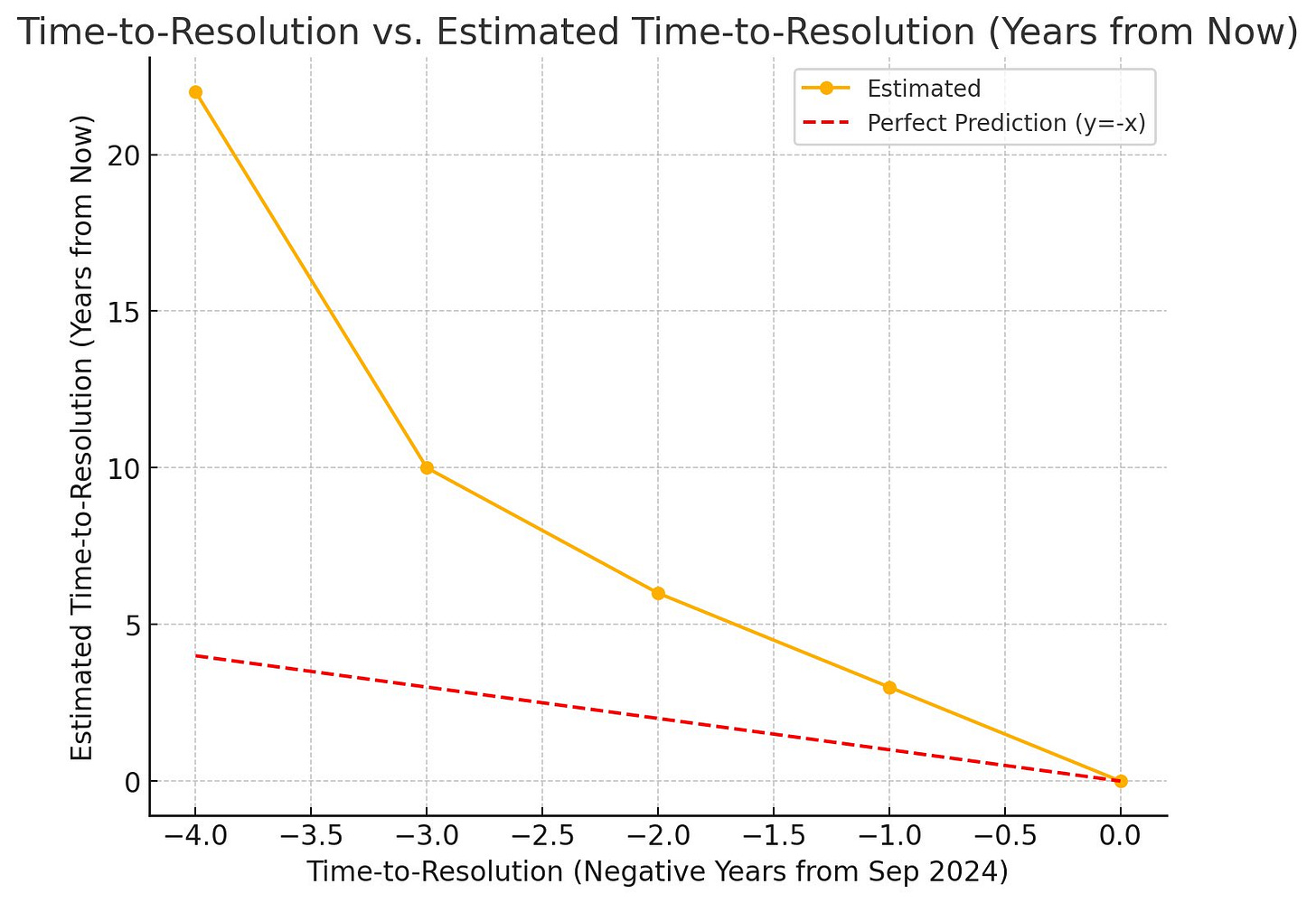

But like most AI forecasts they are all trending closer to the present, which I think we should weight. Here’s an example. On 5 March 2020, a Metaculus question was posted asking ‘When will an AI achieve a 98th percentile score or higher in a Mensa admission test?’ Here are the median forecasts that followed[2]:

· Sept. 2020: 2042 (22 years away)

· Sept. 2021: 2031 (10 years away)

· Sept. 2022: 2028 (6 years away)

· Sept. 2023: 2026 (3 years away)

· Achieved: September 12, 2024[3]

This graph from @Fush on X shows how far out the forecasts were from the date they were posted to resolution of the question.
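To make that drift concrete, here is a minimal Python sketch that works out how far each median forecast listed above overshot the actual resolution. The years come straight from the list; rounding each forecast to a whole calendar year, and the names `forecasts`, `horizon_years` and `overshoot_years`, are my own illustrative assumptions.

```python
# Median Metaculus forecasts for the Mensa question, as listed above.
# Key: year the forecast was read (each September). Value: forecast year.
forecasts = {2020: 2042, 2021: 2031, 2022: 2028, 2023: 2026}

RESOLVED_YEAR = 2024  # the question resolved on 12 September 2024


def horizon_years(made: int, forecast: int) -> int:
    """How far away the milestone looked when the forecast was read."""
    return forecast - made


def overshoot_years(forecast: int, resolved: int = RESOLVED_YEAR) -> int:
    """How many years later than reality the forecast turned out to be."""
    return forecast - resolved


horizons = {made: horizon_years(made, f) for made, f in forecasts.items()}
overshoots = {made: overshoot_years(f) for made, f in forecasts.items()}

for made in forecasts:
    print(
        f"Sept. {made}: looked {horizons[made]} years away, "
        f"forecast was {overshoots[made]} years too late"
    )
```

Every one of the four forecasts was too late, and the error shrinks only as the resolution date approaches.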

Similarly, another set of AI-expert forecasts from 2023 estimates ‘Human Level Machine Intelligence’ (aka Weak AGI) at a 50% probability by 2040, “down thirteen years from 2060 in the 2022…[survey]”.[4] That’s a big recalibration. It’s to 2040, not the Review’s timetable of 2050. And it’s 12 months out of date: we don’t have the 2024 updated forecast yet.

Another neat way of looking at this is a Metaculus forecast, which reverses the approach, asking ‘Will there be human-machine intelligence parity before 2040?’ which is kinda like asking you to bet against AGI by that date. Read this way, currently 98% of forecasters believe we will have AGI by 2040. Just 2% forecast we will not. How does 98/2 shift if we give it >60% more time and ask for the probability of AGI to the Review’s 2050?

New information could push things out further – we might learn that the Scaling Laws[5] for AI models have hard limits, or perhaps we will find one of those ‘AI will never…’ benchmarks and watch it prove unsurpassable. Maybe AI recursive self-improvement – AI training better AI – proves impossible. But none of these things seem likely, and progress is speeding up, not slowing down. Those ‘straight lines on graphs’ suggest to me that AGI will likely (60%) be with us by 2026, and highly likely (85%) by 2050, which the publicly accessible call for evidence suggested was the timeframe for the UK Defence Review.

So for that reason, I take a look here at what making AI the #1 Priority in the Review would mean in practice, if you accepted that within the timespan of the review, we would achieve AGI.

What does the MOD think?

It would be great to see the logic of MOD prioritisation or de-prioritisation of AI, against their own recommended best-practice (probabilistic forecasts – see the Defence Concepts & Doctrine Centre’s (DCDC’s) JDP 2-00 Intelligence… p. 64, para 3.47).

It’s hard to know what the MOD thinks at the moment. DCDC’s September 2024 publication, Global Strategic Trends to 2055, implicitly forecasts against the arrival of AGI by 2055. It does so not directly, but by describing a world to that timeframe where AI enables many things to be ‘increasingly automated’ but no more. The section devoted to AI acknowledges only that it will likely outperform humans in image-based tasks (by 2030), with no forecast on AGI, nor serious discussion of its implications. The Review team should rectify this in their publication.

The Review might conclude against making AI the #1 Priority. But if they can help us all understand why they have reached that conclusion, it would be a great help in furthering the discussion – not least within Defence. It would get us beyond what is often apparently muddled thinking and a rhetoric/reality gap[6], to something more coherent. Whereas if they conclude there is, idk, a 20% chance we could get there in the timeframe of the Review, it should spark a really serious debate about how Defence prepares for that world.

But what if the Review team didn’t bet against the broad consensus, and accepted we are highly likely to reach AGI within the lifetime of the review, by 2050?

I offer some first thoughts on this below. I suggest most readers skip to this section.

That said, I wrote the next ‘Caveats’ section below in part as reflection of my own nerves about how those recommendations will be received. If you’re inclined to ridicule them, can you please read this section first?!

Caveats

Caveat 1. To argue we are highly likely (85%) to see AGI in the timeframe of the Review, I use probabilistic forecasts: intelligence best practice, not millenarian declarations of deterministic certainty. It might not happen, and if it doesn’t, we won’t be left gazing skyward like a cargo cult, forever waiting for a sign. Instead, we use these projections as a guide – an informed, evolving framework for action, a principled approach to balancing risk, opportunity and uncertainty.

I am asking you, the reader, and the Review team, to think like intelligence officers, who, as General Colin Powell asked, must “Tell me what you know. Tell me what you don't know. Then tell me what you think. Always distinguish which is which.”

Caveat 2. I don’t think the MOD will come anywhere close to implementing any of the things suggested below. If we get no probabilistic forecast from the MOD I think we can infer, in actions and prioritisation to date, that the MOD is in the 2%, believing humans will remain superior in most domains not just until 2040, but beyond 2050.

Caveat 3. There are a great many pressing issues the MOD needs to address. Being the #1 priority would not make it the only priority, in the same way that our nuclear deterrent being (arguably) our #1 priority today, doesn’t make other things unimportant. For example, industrial capacity would, for me, be a close second to AI. Though the two are linked, as I described at Farnborough Air Show this year. It need not detract from prioritising lethality – indeed what could be more lethal than being able to constantly outthink your enemy in planning and execution from Grand Strategic to micro-tactical? Nor does it mean we wouldn’t be spending on military equipment. I lay this caveat out to avoid those that disagree with the argument that AI should be the #1 priority creating a straw argument by suggesting that my saying so means I think X or Y doesn’t matter. That’s not the case. I just think everything else matters less than AI that is smarter than everyone that ever lived.

Caveat 4. A final caveat: to implement the recommendations below would require taking risk in multiple areas. Some of those, it seems to me, are very reasonable. But there is no public pressure on this issue, and there is far more political, social, and career risk involved in arguing for making AI the #1 priority than there is in avoiding the issue or arguing against it. There is also the risk of betting big on AI and then seeing progress plateau and the investment wasted. Given how far behind the adoption curve Defence is, #1 prioritisation still makes sense against the AI we have today. It was against this that Rob & I offered our more conservative recommendations in Don’t Blink. What follows is what you might do if you accepted the consensus forecasts that AGI & ASI will come in the timeframe of the Review, and responded proportionately.

If AI was the #1 Priority

So, caveats done: what would UK Defence do if it accepted the median estimates for AGI and ASI (2027 and 2032), thought through the implications of this fully, and acted accordingly?

1. Energy. Immediately begin working across Government to get much of the Defence estate pre-approved for the deployment of Rolls-Royce’s small modular nuclear reactors (SMRs), to power our race to develop AGI & ASI.

2. Target Prioritisation. Following the logic that:

…if it is cognitive advantage, applied to how you prepare for, and fight, your future wars, that determines victory (see my talk here (2019) and in writing here and here).

…And if, AI is going to give consistent cognitive advantage, in pre-war planning to deter, and in-war, strategic, operational and tactical execution.

…Then, the most important targets for our adversary will be our AI infrastructure.

Therefore, UK Defence would start building our SMRs underground, in hardened bunkers – some of course already exist, and perhaps could be repurposed. But if not they must be built new.

And we would start planning to destroy our adversaries’ AI infrastructure above all else.

3. Data & Compute. We would build our data centres and supercomputers underground too, to give us access to unprecedented amounts of compute. We would bring our existing data together with far more urgency and far more resources than we have to date (there are efforts, but always very small scale, with tiny teams). We would start building the virtual worlds that can simulate battle as accurately as possible, to start generating the synthetic data we need to train future models at all levels of warfare. We’d be focused on getting live feeds to those models, to update and correct errors rapidly.

4. Reprioritise Spending. We would see that Government spending is woefully misaligned with the threat and opportunity AI presents. The MOD’s budget is £57.1Bn a year. It spends just £4.4Bn of that (~8%) on Digital, and a tiny fraction of that on AI.

The Manhattan Project cost ~$30Bn (£22.5Bn) in today’s money. Yet the threat and opportunity from and in AI is far greater – not just the vast destructive power of hugely powerful bombs, but unprecedented scientific, technological, diplomatic and economic power. Nuclear weapons, ICBMs, nuclear submarines, are the product of intelligence. What defences and offensive weapons and ways of war might Amodei’s ‘country of geniuses in a data center’ produce?

If, as the forecasts suggest, AGI and ASI could be here within the timeframe of the Review, and perhaps within two to three years of its conclusion, and if they would quickly render existing equipment and plans obsolete (through scientific runaways, innovation escape velocity, vastly better planning and execution, uncrewed systems outperforming all crewed systems etc), then even 8%, all on AI, is not enough. AI spending should be at least commensurate with the private sector. I hear you gasp at the suggestion!

Let’s make the claim more tangible. DeepMind is said to cost Google around £650M[7] per year. Is expecting the MOD to spend the same, 1.14% of its budget, on a similar capability really too much, against the threat and opportunity we face? Realistically, 10% annually, £5.71Bn, is probably still not proportionate to the threat/opportunity.
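For anyone who wants to check the percentages, here is a minimal Python sketch of the arithmetic. It uses only the figures quoted above (a £57.1Bn annual budget and ~£650M a year for DeepMind); the function and variable names are illustrative assumptions of mine.

```python
# Figures quoted in the text, in £ billions per year.
MOD_BUDGET_GBP_BN = 57.1
DEEPMIND_COST_GBP_BN = 0.65  # ~£650M per year


def share_of_budget(spend_bn: float, budget_bn: float = MOD_BUDGET_GBP_BN) -> float:
    """Return spend as a percentage of the annual budget."""
    return 100 * spend_bn / budget_bn


# DeepMind-scale spending as a share of the MOD budget.
deepmind_share = share_of_budget(DEEPMIND_COST_GBP_BN)

# What a 10% annual allocation would be worth in £ billions.
ten_percent_spend = 0.10 * MOD_BUDGET_GBP_BN

print(f"DeepMind-scale spend: {deepmind_share:.2f}% of budget")
print(f"10% of budget: £{ten_percent_spend:.2f}Bn")
```

Swapping in a different budget or lab cost is a one-line change, which makes it easy to test the claim against other comparators.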

I think this also gives the answer to those who tell me we can’t afford to compete with the big AI labs. When you hear numbers bandied about, like Google or Microsoft spending $100Bn (£74Bn) on AI, note the caveat ‘over time’. The MOD spends $100Bn every 1.4 years. Over 10 years, it could match Google or Microsoft if it wanted, spending on AI 14.6% of its budget each year to do so. We could afford to compete, and at relatively lower cost than many things of lower utility that we are already spending money on.[8]
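The 14.6% figure can be reproduced with a short Python sketch. It assumes the exchange rate given in footnote 8 (£1 = $1.20) and a flat budget of £57.1Bn; it deliberately ignores the inflation adjustment the footnote also mentions, so treat it as a rough check rather than the full calculation. All names are illustrative.

```python
MOD_BUDGET_GBP_BN = 57.1  # annual MOD budget, £ billions
TARGET_USD_BN = 100.0     # headline big-lab AI spend, $ billions
USD_PER_GBP = 1.20        # exchange rate assumed in footnote 8
YEARS = 10                # period over which to match the spend


def annual_share_needed(
    target_usd_bn: float,
    years: int,
    budget_gbp_bn: float,
    usd_per_gbp: float,
) -> float:
    """Percentage of the annual budget needed each year to hit the target."""
    target_gbp_bn = target_usd_bn / usd_per_gbp  # convert the target to £
    per_year_gbp_bn = target_gbp_bn / years      # spread evenly over the period
    return 100 * per_year_gbp_bn / budget_gbp_bn


annual_share = annual_share_needed(
    TARGET_USD_BN, YEARS, MOD_BUDGET_GBP_BN, USD_PER_GBP
)
print(f"{annual_share:.1f}% of the budget per year")
```

The result is sensitive to the exchange rate, which is why it is passed in as a parameter rather than hard-coded into the function.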

If we accepted AGI was likely to arrive within the timeframe of the Review, we’d abandon human ego-centric and hubristic notions that man will remain the dominant force on the battlefield, and go all in on uncrewed systems now – reprioritising spending accordingly. The jargonistic RAS Strategy (Robotic and Autonomous Systems) would not be a sideshow to the equipment plan: it would be the equipment plan.

5. Network Protection & Redundancy. The cutting of communication lines to Shetland in 2022 is said to have been almost certainly conducted by a Russian ship or submersible. If AI, AGI, or ASI become critical to the UK’s chances of victory in war, as I have argued elsewhere that they will, we can expect similar efforts to isolate our AI systems through physical, cyber and electronic warfare attack.

This may be far harder to do in practice. One of the concerns with AGI and ASI is that it might ‘escape’ to stop humans from turning it off (indeed Max Tegmark starts his brilliant 2018 book on AI, Life 3.0, with a fictional description of how this could happen). So also an AGI or ASI, even one that remained servant and not master to its creators, might feasibly ‘escape’ – perhaps to compute infrastructure in space, elsewhere in the globe, people’s home computers – if (e.g.) Russia were to try to cut it off.

Nevertheless, if the UK were to make AI the #1 priority in the review, we would make communications redundancy, protection of satellites and undersea cables and other data links, a high priority too. We would consider where and how we might build deep underground, or deep space connectivity to our allies, so that we could keep our AI coordinating with theirs, should existing connections be cut.

6. Automating R&D. If, as I argue here and here, we will link frontline to factory, with sensors flowing information back from the front straight into ‘self-driving labs’ that send their findings at superhuman speeds into automated factories, or as instructions for 3D printing and digital fabrication, then these facilities will be the target of attack. They too would have to be underground and/or dispersed.

7. Nuclear forces. AGI or ASI would offer such a decisive advantage in conventional war fought against a nation that did not have it, that nuclear war would be far more likely. As such, nuclear risks would increase, and the need for the deterrent – and de-escalatory diplomacy – with it. If the Strategic Defense Initiative, Reagan’s ‘Star Wars’ programme, was destabilising, as critics claim, how much more so developing a strategic and command brain your adversary knows is far smarter than they can hope to be?

8. Organisational Structures & Leadership: an AI Manhattan Project. Truly making AI the #1 priority would be an AI Manhattan Project, led by our most effective and demanding leaders, involving our most talented military personnel and scientists, working side-by-side. It could not succeed in our existing military bureaucracy – which the current Secretary of State for Defence acknowledged needed major reform in opposition, but which the system will likely resist – it would need a new organisation, under a single leader, taking in the greatly expanded budget and held accountable for delivering the results we need.

9. HR & Education. If we made AI the #1 priority in the Review, there would need to be a surge in training at all levels across Defence, to understand AI and its potential. Many more Fellowships in AI would be offered, many outside the UK, principally at universities in the US that are really leading the field. Perhaps also in other allied nations, like Australia, Japan and Canada, to deepen relationships and collaboration. Defence would make Artificial Intelligence Consultant its own career field, training people to have deep expertise in AI, to inform commanders and oversee plans to integrate AI everywhere.

Zig-Zag careers, drawing back in those that leave Defence and have valuable expertise in the technology, would become the norm. There would be a huge effort to attract the world’s leading AI researchers, to work in those rapidly developed underground AI and energy facilities.

10. R&D & Innovation. The pursuit of fully automated R&D, innovation, and capability development and deployment pipelines would be urgent, alongside efforts to develop recursively self-improving AI. Defence would bring in metascience experts, coaches, the world’s leading organisational psychologists, to make sure the talent they attracted worked in an environment most conducive to delivering the breakthroughs we need. What would win future wars would be the speed and effectiveness of innovation systems.

11. AI Intelligence Briefings. Across the Armed Forces and at the highest levels of Government, there would be regular intelligence briefings on AI developments the world over. Which new benchmark was met or blasted through this week? Who is investing what, where, when, how much? What might it mean for our race to lead in AI?

12. AI Alliances. We would form AI Alliances, agreeing to share IP and enable mutual inspections to make sure no nation is cheating. The incentives to do so, given the vast increase in national power that winning the AGI or ASI race would give a nation, could make even the staunchest ally defect, if it saw a way to do so: the Reagan principle ‘trust but verify’ must be a core component of the alliance. We would race together to have some chance of keeping up with authoritarian nations, who can more easily trade their people’s prosperity today for greater power tomorrow than democracies with their messy compromises and short-termism.

13. AI Safety. If the MOD does start to act as if AI was its #1 priority, with the actions described above, it would have created environments in which AI models could be more safely tested: underground, air gapped from the world. This wouldn’t protect engineers and workers from Ex Machina-style manipulation. But it would be considerably better than the development and testing currently being done in the civilian labs of the big AI companies, where security is reportedly poor, and safety an afterthought – more marketing than meaningful constraint.

14. Economic Planning. Were the UK to do all this, and be the first to AGI & ASI as a result, and assuming AI remained the servant, and not the master, it would usher in a world of unprecedented prosperity (see Bostrom’s Deep Utopia) as well as raising some profound philosophical questions about the meaning of life. If we put those aside to concentrate on the security implications – were the UK to invest as described, and get there first, the UK’s relative power in the international system would be unprecedented. Our economic growth breakneck. If our AGI were developed as described, safely underground with tight security controls, figuring out how to act on any advice provided would be a key question. But then, I suppose, it would be one we could ask our oracular AI.

AGI Cognitive Dissonance

To end, I wanted to reflect on the way most such conversations are received. Many seem very willing to acknowledge the rate and direction of progress, and how consistently predictions that ‘AI will never…’ have been proved wrong. Most are willing to accept crowd-sourced forecasts are generally best practice. Few are, when pushed, willing to bet those ‘straight lines on graphs’ will suddenly plateau. But most I think, until recently including me, have a hard time truly internalising just how close to AGI we might be. Few seem to be changing their behaviour or priorities, even amongst many of us that say we accept the forecasts are probably about right. I think there is a kind of AGI cognitive dissonance here, giving rise to AGI psychological avoidance – people find a way not to think about it.

One of the reasons forcing on yourself the discipline of making your own probabilistic forecast or date, along with laying out, at least mentally, the logic and evidence from which it has been derived, is so useful is that it doesn’t allow you to slip out the side gate, dismissing all this as too scary and a bit sci-fi. The truth is, it is a bit scary and a bit sci-fi. But if the broad AGI forecasting consensus is correct, our future prosperity and security as individuals and as a nation may be determined by what we do in the weeks, months, or years immediately ahead.

Moreover, if China’s efforts to hack OpenAI (reportedly successful in 2023, thwarted 9 Oct 2024) succeed, and if the Chinese Communist Party or PLA allocate resources as I’ve suggested we might, the implications will be far more alarming.

A world shaped by a CCP-controlled AGI would be much more terrifying than grappling with our own AGI-related cognitive dissonance. A world where even an ally had such power, and we didn’t, may not be a comfortable one. We must overcome AGI psychological avoidance, engage with the risks and threats, think clearly. Plan accordingly.

[1] Again – see Don’t Blink.

[2] Neatly summarised on X by @jgaltTweets

[3] See also: https://trackingai.org/IQ

[4] Grace, K., Sandkühler, J.F., Stewart, H., Thomas, S., Weinstein-Raun, B., and Brauner, J., 2023. 2023 Expert Survey on Progress in AI. AI Impacts Wiki. Available at: https://wiki.aiimpacts.org/ai_timelines/predictions_of_human-level_ai_timelines/ai_timeline_surveys/2023_expert_survey_on_progress_in_ai [Accessed 15 September 2024].

[5] See https://arxiv.org/pdf/2001.08361 & https://arxiv.org/pdf/2203.15556.

[6] See “Don’t Blink”: https://cassiai.substack.com/p/dont-blink-how-the-uk-can-take-a

[7] Adjusting 2022 figures for inflation, to 2024.

[8] To achieve a total expenditure of $100 billion over 10 years, starting with a budget of £57.1 billion, an estimated annual expenditure of 14.6% of the initial budget is required. This calculation assumes a constant exchange rate of £1 = $1.20 and accounts for an annual inflation rate of 2%.