Eyes Wide Shut. AGI in Plain Sight.

How much longer can we rearrange the deckchairs as the AI iceberg looms larger?

You won’t read this in the Times. If you are not on X, you might not even know it. But yesterday, OpenAI released its o3 model, which achieved 76% on the ARC-AGI (Abstraction and Reasoning Corpus for Artificial General Intelligence) test. This is a series of tests deliberately designed as ‘the only formal benchmark of AGI progress’. In this conception, an AGI is a system able to efficiently acquire new skills and solve open-ended problems. ARC was designed by those who believed LLMs would be unable to adapt to problems they hadn’t been trained on, couldn’t cope with novelty and relied on memorisation, rather than being able to reason their way to a response. They distinguished between skill – the ability to learn to solve new problems, and task-specific intelligence – memorising the solution to a problem and then applying it. ARC was designed to test AI skill: the ability to solve novel problems and to reason. Here’s how previous models had performed (via @kimmonismus on X) on the test, and how o3 now does:

As a result, many are claiming yesterday was the day AGI arrived. As Professor Ken Payne put it to Al Brown and me recently, the main sound one hears in response is the scrape of moving goalposts. No doubt many will find reasons to say this is not AGI. Francois Chollet acknowledged that this was a significant scientific breakthrough and step-change in AI capabilities, but also cautioned that:

‘Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don't think o3 is AGI yet. o3 still fails on some very easy tasks, indicating fundamental differences with human intelligence.’

He might be right. But that isn’t really the point.

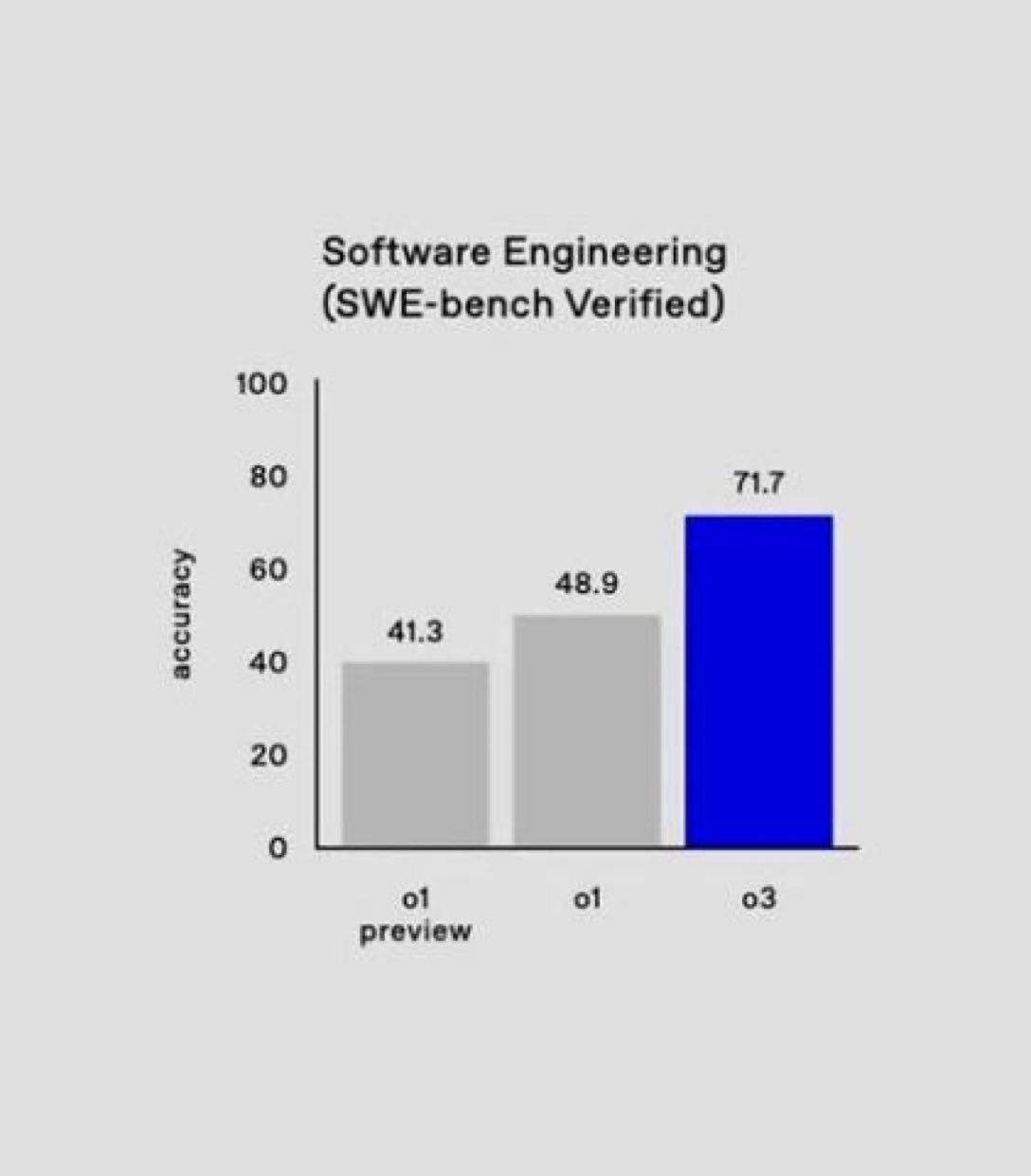

o3 has also radically improved AI’s performance on a number of other benchmarks over previous and rival models. For example, in software engineering (via @IterIntellectus on X):

…leading the same poster to ask what any knowledge worker should do now, noting that ‘even if the model is $2000/month, it’s still cheaper than a graduate employee’.

o3 also solved 25% of insanely hard frontier maths problems, those that take many hours of intense, focused work from the leading mathematical minds, and on which previous AI models had solved less than 2%.

One OpenAI researcher described o3 as ‘bonkers good’ and a ‘massive step up from o1 on every one of our hardest benchmarks’. No doubt I have not captured the full range of benchmark breakthroughs that o3 has achieved, and no doubt it will push further beyond the frontier of AI and human performance as it is tested more in the coming days, weeks and months.

Sam Altman has been quick to point out that not only is the model achieving these incredible results, it is doing so at a massive cost reduction – a trend he expects to continue. AI is not only getting better than you, it is becoming ever cheaper and more efficient than you.

This breakthrough came days after a paper was published showing that the previous o1-preview model had performed at a superhuman level in differential diagnosis, diagnostic clinical reasoning, and management reasoning, across medical ‘…tasks that require complex critical thinking…’.

In August, DeepMind released a robotic AI that could compete at a human level in table tennis (winning 45% of games). In September, DeepMind announced that its AlphaChip could now design semiconductor chip layouts at ‘superhuman’ levels. Earlier this month (December 2024), DeepMind showed that its weather forecasting model GenCast outperformed all current models on 15-day forecasts and predicted extreme weather events more accurately. Also this month, DeepMind released Veo 2, which outperformed OpenAI’s Sora in generating hyper-realistic video clips, and began the roll-out of Project Mariner, an agent-based AI that can reason across your web browser, moving the cursor on your screen, clicking buttons and filling out forms – like tasking a human to do jobs on your computer for you.

If all that wasn’t enough, this month also saw the release of Genesis, a physics engine that allows robots to train in virtual environments – where one hour of training is the equivalent of 10 years of training in the real world. The breakthrough is remarkable and worthy of a post of its own, given that it shows, as one of the researchers who developed it commented, that if an “…AI can control 1,000 robots to perform 1 million skills in 1 billion different simulations, then it may 'just work' in our real world…”. That this breakthrough is a virtual footnote in this article should tell you something. Can you feel the OOMs (orders of magnitude)?

In June this year, Leopold Aschenbrenner told us that AGI doesn’t require believing in science fiction, it requires believing in straight lines on graphs. In October, Rob Bassett Cross and I argued that the rate and direction of change were such that the UK Defence Review team might adopt the mantra ‘Don’t Blink’ whenever discussing AI, as a way of responding to claims that ‘AI will never’ – such was the speed at which AI benchmarks were being passed. That same month, I argued that the Review Team must make the arrival of AGI a planning assumption within the Review’s planning horizon of 2050, perhaps within the life of this parliament (2029), and that it would be an abdication of its responsibilities if it did not include a rigorous forecast of when it expected AGI to arrive, with investment and action commensurate with the risk and opportunity presented. In many talks across the UK and US since, I have argued that ‘if you are not betting on AGI, you are betting against it’. I am far from a lone voice. We cannot say we did not know.

Meanwhile, the UK Strategic Defence Review continues, but the prevailing debate appears fixated on budgetary questions rather than strategic necessities. Lobbyists argue over the merits of acquiring specific assets: how many ships or F-35s are sufficient, the right number of tanks or artillery pieces, and even whether the UK should invest in a continental-style army (spoiler: it shouldn’t). For anyone attuned to the rapid, transformative pace of AI development, this debate feels like watching a government obsess over deckchairs as the iceberg looms. Not so much ‘Don’t Blink’ as ‘Eyes Wide Shut’.

Even if you accept that conventional munitions are with us for the foreseeable future, the enhancement of capability that AGI will provide will be extraordinary. If we seize it.

This is happening fast, and there is zero preparedness. Socio-economic and ontological shocks are coming in the next 5 to 10 years.